Case Study: Re-thinking Alert Banners When “Everything” Is Breaking News

Method

Process Overview

We conducted usability testing to uncover why important announcements were being overlooked on websites using the GovHub content management system. Surveys would have unnaturally directed participants' attention to the Alerts feature, so we replicated real-world browsing conditions instead. We gave each participant a task unrelated to Alerts and, afterward, asked what they had noticed about the Alert on the page. This let us capture authentic user behavior and determine whether design changes could make Alerts more noticeable without annoying repeat visitors.

Research

What was the issue to be solved?

Agencies needed a reliable way to share time-sensitive information, such as unexpected office closures or fraud alerts. However, several problems emerged, especially when agencies stacked multiple Alerts on top of one another.

Banner blindness was causing users, especially repeat visitors, to scroll past Alerts without noticing them.

The existing Alert design blended too closely with the website's color palette, making it easy to ignore.

Longer Alerts pushed primary site content further down, frustrating users who came for other tasks.

Questions to answer:

Would a smaller, less intrusive Alert still be noticed?

If noticed, would users understand and remember its content?

Could we make Alerts prominent without irritating frequent visitors, even when agencies stacked several of them?

Testing

Multivariate Testing

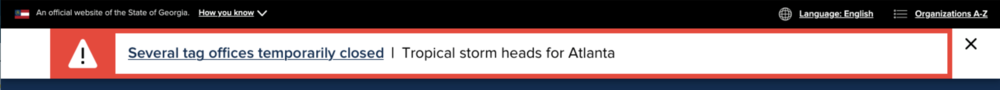

We designed four distinct Alert styles and showed each participant only one. To simulate a natural browsing flow without using a survey, we gave participants:

A Primary Task (Unrelated to Alerts)

Participants viewed a mock agency website and were asked to locate information on specialty license plate styles.

This initial task intentionally shifted their focus away from Alerts to mimic real-world browsing patterns.

Follow-Up Questions:

Did they notice an Alert on the page?

Could they describe what the Alert looked like?

Could they tell us what the Alert was about in their own words?

Could they select the correct Alert content from multiple-choice options?

This method helped measure true visibility, recall, and comprehension of the Alerts without priming users to look for them.

Results: Key Takeaways

What we learned

Color Contrast Matters

Alerts with a bright red or orange outline stood out more than those matching the site’s main color palette.

Solid-colored backgrounds performed worse than outlined designs with light interiors.

Size & Shape Influence Perception

Thinner Alerts with a single line of text (and a link) were more effective.

Larger, paragraph-style banners that resembled traditional banner advertisements were more likely to be ignored.

User Behavior Confirmation

The existing Alert colors were too visually cohesive with the rest of the page, so participants missed Alert content, especially when multiple Alerts pushed the main content below the fold.

Usability Testing Impact

The new Alert style, thinner and outlined in new colors, improved noticeability and comprehension without frustrating frequent visitors.

Lessons Learned

Always be evolving

For Alerts especially, aesthetics should serve usability, even if that means breaking from the site's main palette. Most importantly, human behavior evolves over time, so design patterns generally accepted as meeting UX standards should be re-examined rather than taken for granted, to make sure they evolve along with that behavior.